When AI Thinks Like Humans: Simulating Cognitive Biases in Reinforcement Learning

Saturday, June 21, 2025

🧠 Introduction: Can AI be human in thought?

Imagine a scenario where you have an AI that thinks like a person, complete with all our quirks and biases. I decided to dive into this by creating some simple reinforcement learning (RL) agents and loading them up with classic human biases like:

- Loss Aversion ("I hate losing way more than I love winning")

- Anchoring ("First impressions stick around forever")

- Confirmation Bias ("I only believe what I already think")

- Optimism Bias ("Everything's going to be great!")

Then, I threw them into the classic multi-armed bandit problem, which is like the simplest gambling game in the RL world.

🤖 Simulation Setup

The core setup is simple:

You have 5 slot machines (arms). Each pull pays a reward of 1 or 0, with some probability.

The agent doesn’t know the odds — it must learn which arm gives the best reward over time.

Normally, a basic epsilon-greedy agent pulls a random arm a small fraction of the time (exploration) and otherwise sticks to the best arm it has learned so far (exploitation). But what if this agent thought like a human?
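To make the baseline concrete, here is a minimal sketch of an unbiased epsilon-greedy agent on a Bernoulli bandit. The function name, win probabilities, step count, and epsilon value are illustrative assumptions, not the exact settings behind the plots in this post.

```python
import random


def run_epsilon_greedy(probs, steps=1000, epsilon=0.1, seed=0):
    """Run one epsilon-greedy agent; return (value estimates, total reward)."""
    rng = random.Random(seed)
    n_arms = len(probs)
    q = [0.0] * n_arms      # estimated value of each arm
    counts = [0] * n_arms   # number of pulls per arm
    total = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                   # explore
        else:
            arm = max(range(n_arms), key=lambda a: q[a])  # exploit
        reward = 1 if rng.random() < probs[arm] else 0    # Bernoulli payout
        counts[arm] += 1
        q[arm] += (reward - q[arm]) / counts[arm]         # running sample mean
        total += reward
    return q, total


if __name__ == "__main__":
    # Hypothetical win probabilities for the 5 arms.
    estimates, total = run_epsilon_greedy([0.1, 0.2, 0.3, 0.5, 0.8])
    print(estimates, total)
```

Each biased agent below can be read as a small change to either the update line or the starting values in this loop.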

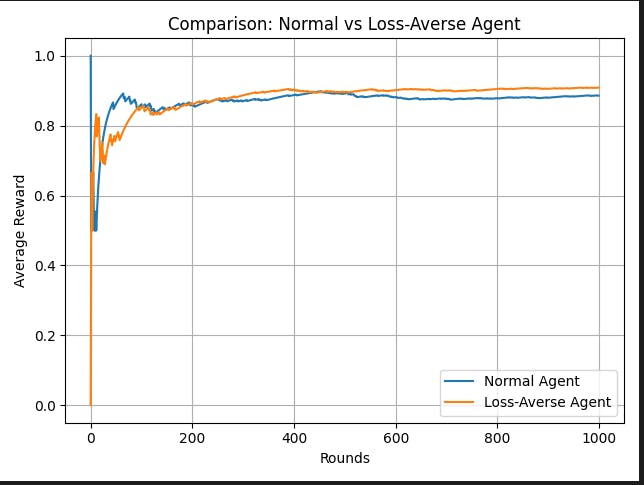

🧪 Cognitive Bias #1: Loss Aversion

Inspired by Kahneman & Tversky’s Prospect Theory, I simulated a loss-averse agent that feels a failure (reward = 0) more strongly than it feels a success (reward = 1).
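One way to sketch this is to turn each raw reward into a subjective utility before updating the arm's value. The `loss_weight` of 2.0, the step size `alpha`, and the function name are assumptions chosen for illustration; the idea is only that a miss counts as a loss bigger than a win counts as a gain.

```python
def loss_averse_update(q, reward, alpha=0.1, loss_weight=2.0):
    """Move the value estimate q toward a loss-averse utility of the reward."""
    # A win is worth +1; a miss is felt as a loss of size loss_weight (> 1).
    utility = 1.0 if reward == 1 else -loss_weight
    return q + alpha * (utility - q)


if __name__ == "__main__":
    print(loss_averse_update(0.0, 1))  # estimate after a win
    print(loss_averse_update(0.0, 0))  # estimate after a miss
```

With these assumed numbers, a single miss pushes the estimate down twice as far as a single win pushes it up, so arms that miss often lose their appeal quickly.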

🔬 Result:

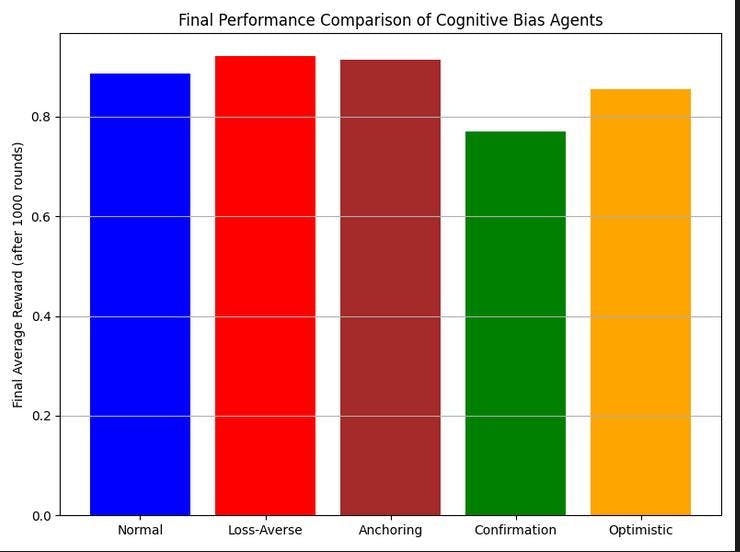

Loss aversion improved learning! These agents were quicker to ditch low-reward arms and converge on better ones.

“I’m not going back to that arm again. It hurt me.”

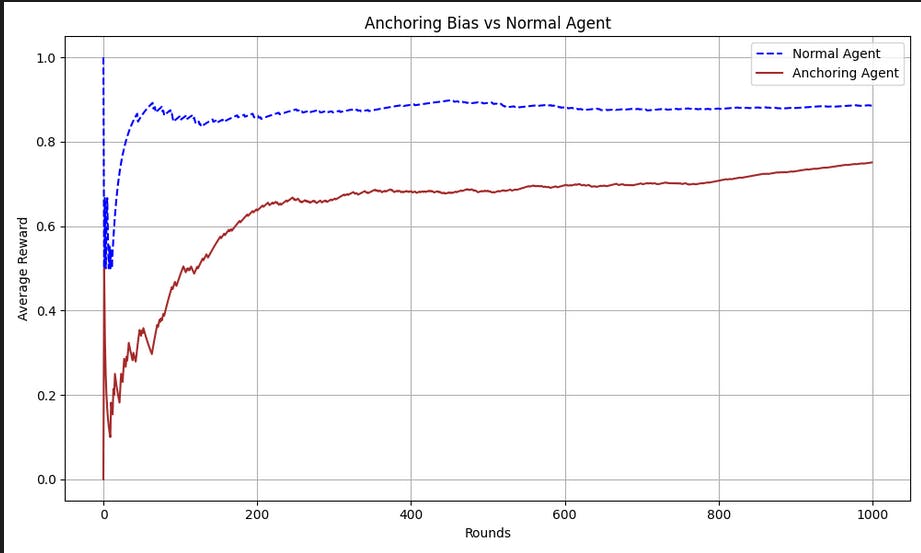

🧪 Cognitive Bias #2: Anchoring

Anchoring bias makes the agent give too much weight to its first few experiences. It’s like meeting someone once and assuming you know them forever.
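A minimal sketch of anchoring, assuming it is modeled as a step size that shrinks faster than a plain average would need. The `decay` exponent and the function name are hypothetical choices for illustration, not necessarily how the agent in the plots was built.

```python
def anchored_estimate(rewards, decay=2.0):
    """Value estimate whose step size shrinks as 1 / n**decay."""
    q = 0.0
    for n, reward in enumerate(rewards, start=1):
        step = 1.0 / n ** decay   # decay=1.0 would give the plain sample mean
        q += step * (reward - q)  # later rewards move the estimate less and less
    return q


if __name__ == "__main__":
    history = [1, 0, 0, 0]  # one early win, then three misses
    print(anchored_estimate(history))             # anchored on the first pull
    print(anchored_estimate(history, decay=1.0))  # unbiased average
```

With one early win followed by three misses, the anchored estimate stays at 0.625 while the plain average drops to 0.25. That gap is the first impression refusing to fade.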

🔬 Result:

If the agent’s early pulls were bad, it never recovered. Anchoring on a poor arm meant it got stuck, unable to unlearn its first impressions.

“Arm 2 gave me a win at round 5. It must be the best forever.”

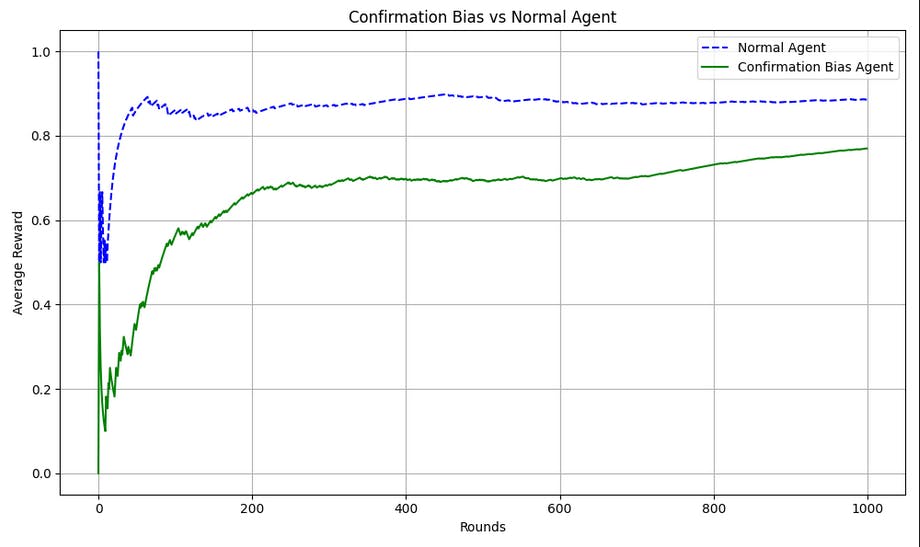

🧪 Cognitive Bias #3: Confirmation Bias

Here, the agent only updates its beliefs when new feedback agrees with its expectations. Disagreeing results? Ignored.
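Here is a rough sketch of that rule. It assumes the agent "expects" a win from any arm it currently values at or above a threshold and a miss otherwise; the threshold of 0.5, the step size, and the function name are illustrative assumptions.

```python
def confirmation_update(q, reward, alpha=0.1, threshold=0.5):
    """Update q only when the reward matches what the agent already expected."""
    expected = 1 if q >= threshold else 0
    if reward != expected:
        return q                      # disagreeing evidence is ignored
    return q + alpha * (reward - q)   # agreeing evidence reinforces the belief


if __name__ == "__main__":
    print(confirmation_update(0.8, 0))  # a miss on a "good" arm changes nothing
    print(confirmation_update(0.8, 1))  # a win on a "good" arm is absorbed
```

Under this rule a belief can only move further in the direction it already leans, which is why a wrong first guess is so hard to shake.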

🔬 Result:

The worst performer by far. It clung to false beliefs, rejected learning, and made textbook human mistakes.

“That zero wasn’t real. My arm is still good.”

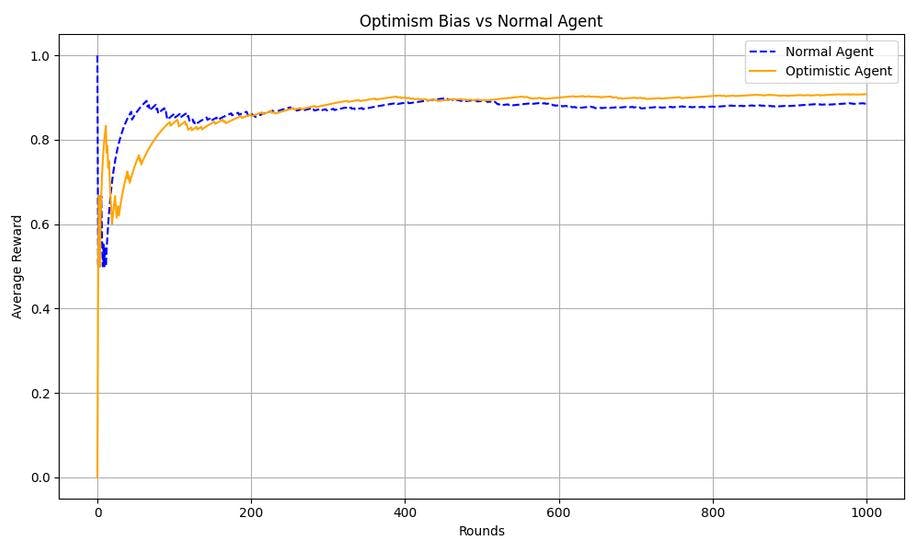

🧪 Cognitive Bias #4: Optimism Bias

An optimistic agent starts by assuming every arm is great, using high initial values. This simulates overconfidence and hopefulness — great for early exploration.
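A minimal sketch of optimistic initial values, assuming a purely greedy agent with a constant step size. The starting value of 2.0 (above the largest possible reward), the win probabilities, and the function name are assumptions for illustration.

```python
import random


def run_optimistic_greedy(probs, steps=1000, initial_value=2.0, alpha=0.1, seed=0):
    """Greedy agent with optimistic starting values; return (estimates, pulls)."""
    rng = random.Random(seed)
    q = [initial_value] * len(probs)  # every arm starts out looking great
    pulls = []
    for _ in range(steps):
        arm = max(range(len(q)), key=lambda a: q[a])    # always greedy
        reward = 1 if rng.random() < probs[arm] else 0
        q[arm] += alpha * (reward - q[arm])  # real rewards pull the estimate down
        pulls.append(arm)
    return q, pulls


if __name__ == "__main__":
    # Hypothetical win probabilities for the 5 arms.
    estimates, pulls = run_optimistic_greedy([0.1, 0.2, 0.3, 0.5, 0.8], steps=20)
    print(pulls)
```

Because no real reward can live up to the starting value, every pull is a small disappointment that sends the agent on to an arm it has tried less. In this sketch the first five pulls always cover all five arms, with no random exploration needed.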

🔬 Result:

This actually helped! The optimistic agent explored more and often found the best arm faster than the normal one.

“This arm seems promising. So does that one. Let’s try all of them!”

🤔 So What’s the Point?

This project shows that irrational human biases can be modeled computationally and that, sometimes, they actually help in uncertain situations.

🧠 Cognitive biases aren’t just bugs in the brain; they’re heuristics that evolved for speed, not perfection.

Sometimes, bringing those quirks into AI can make agents smarter, at least in a simple simulated world like this one.